AI guardrails for your content standards

The most comprehensive suite of content governance solutions for keeping your AI-generated writing compliant with your standards.

How content governance from Acrolinx uses AI guardrails

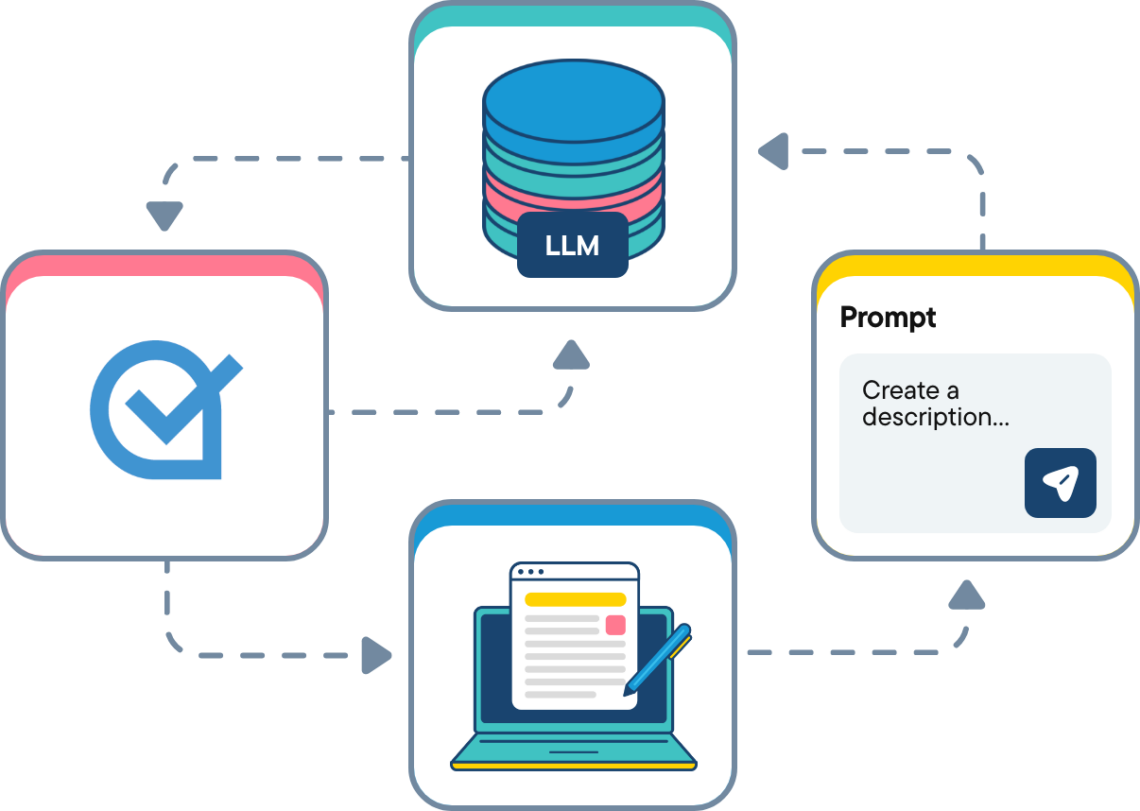

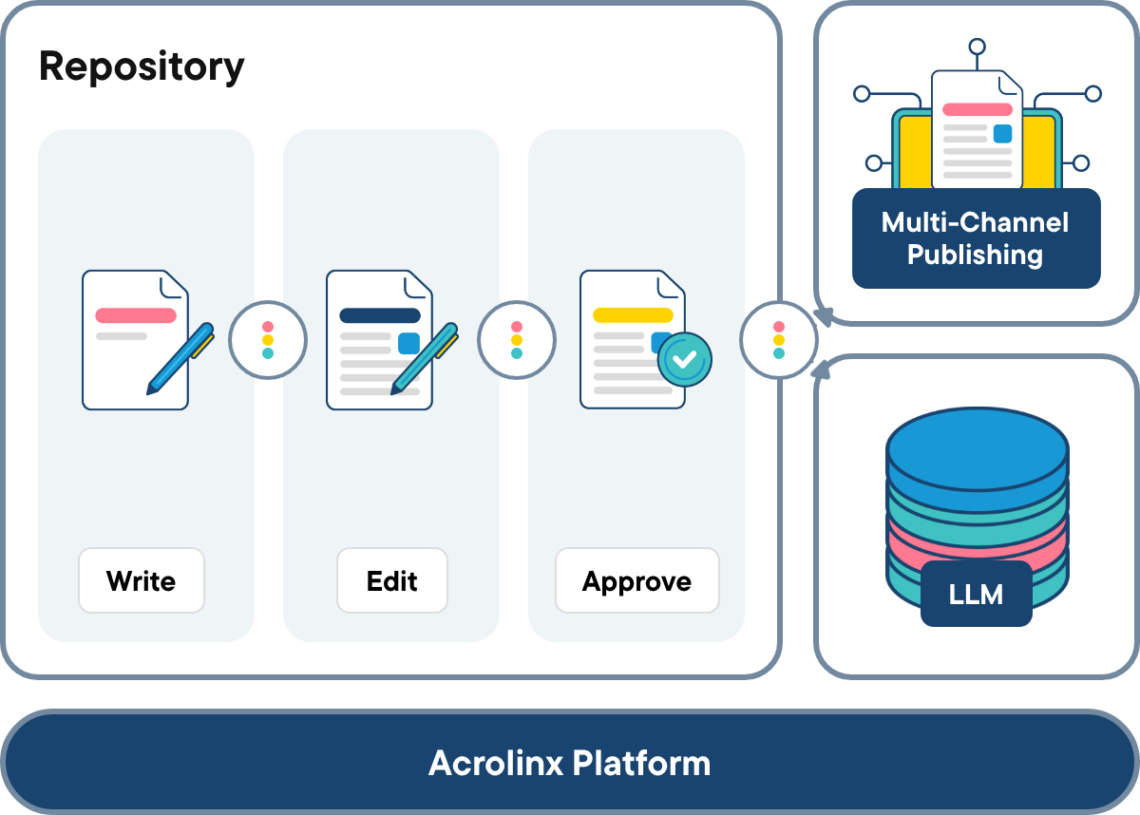

Regardless of how you’re deploying generative AI in your organization, Acrolinx’s automated content governance and editorial writing assistance mesh with your technology stack to ensure the quality of:

Large Language Model (LLM) Fine-Tuning Content

LLM Generated Content

Editorial Writing Assistance

Content in Development

Published Content

How Acrolinx AI guardrails result in high-quality and compliant content

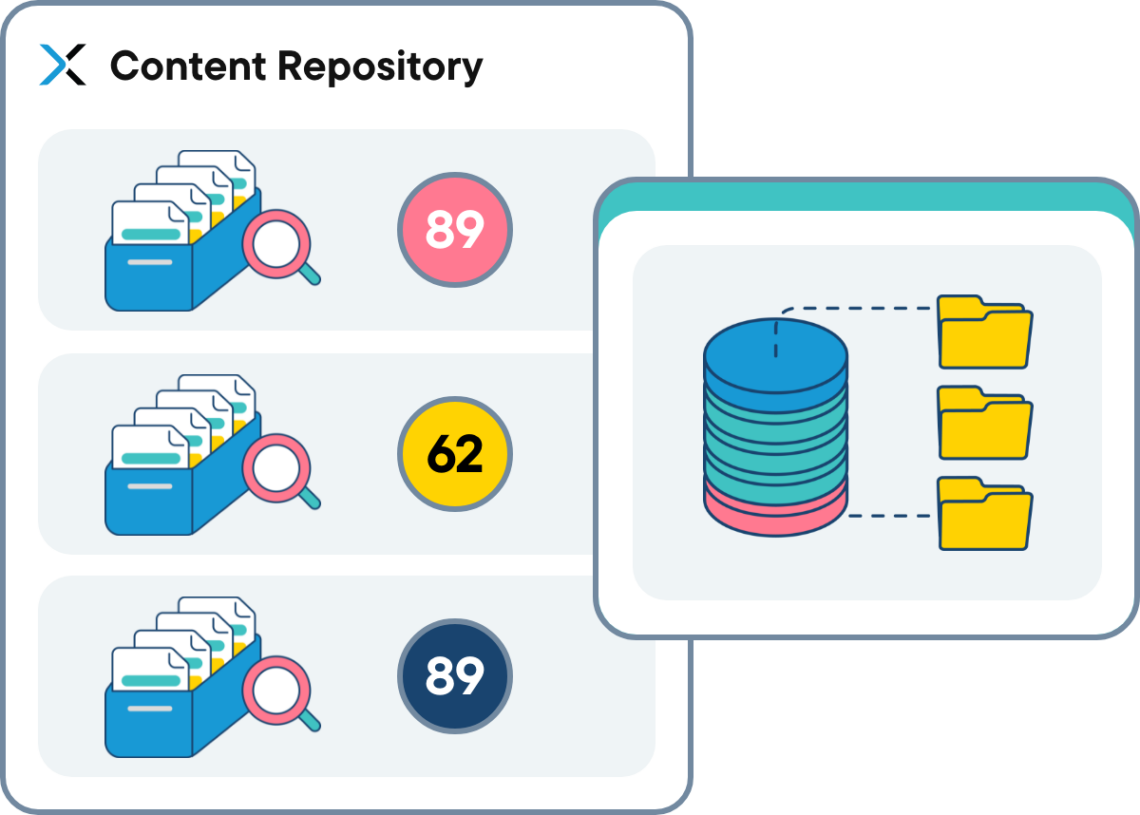

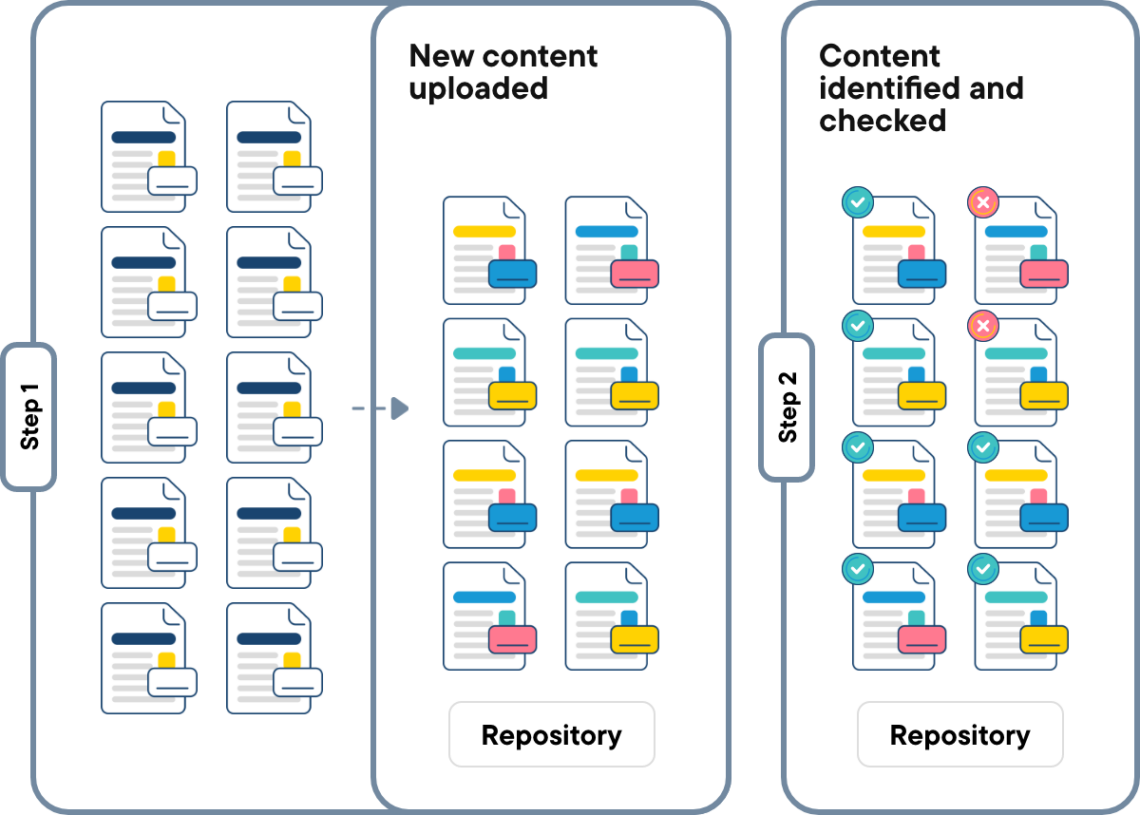

Quality-assure content used for fine-tuning

Quality content in means quality content out. With content quality assurance, you make sure the content your business uses to fine-tune your LLMs meets enterprise quality standards. This drastically improves your model’s output.

Quality-assure LLM-generated completions

Check the quality of the content your LLM generates – before your writers even see it. Acrolinx integrates into your generative AI workflows to check, score, and improve content as it’s generated. This makes sure content follows enterprise style guides and writing standards.

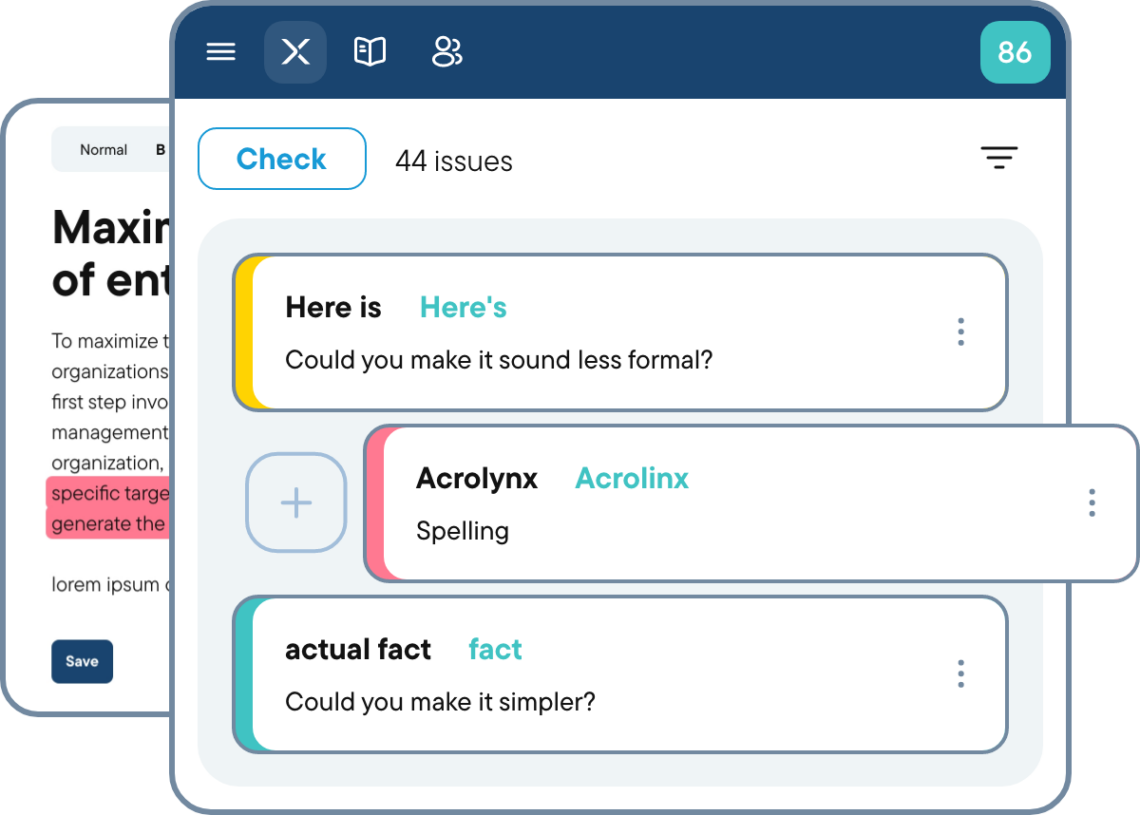

Editorial writing assistance

Provide writers with editorial guidance directly within the applications they use every day. Use our sidebar to instantly check human-written and AI-generated content to maintain content quality and enterprise writing standards.

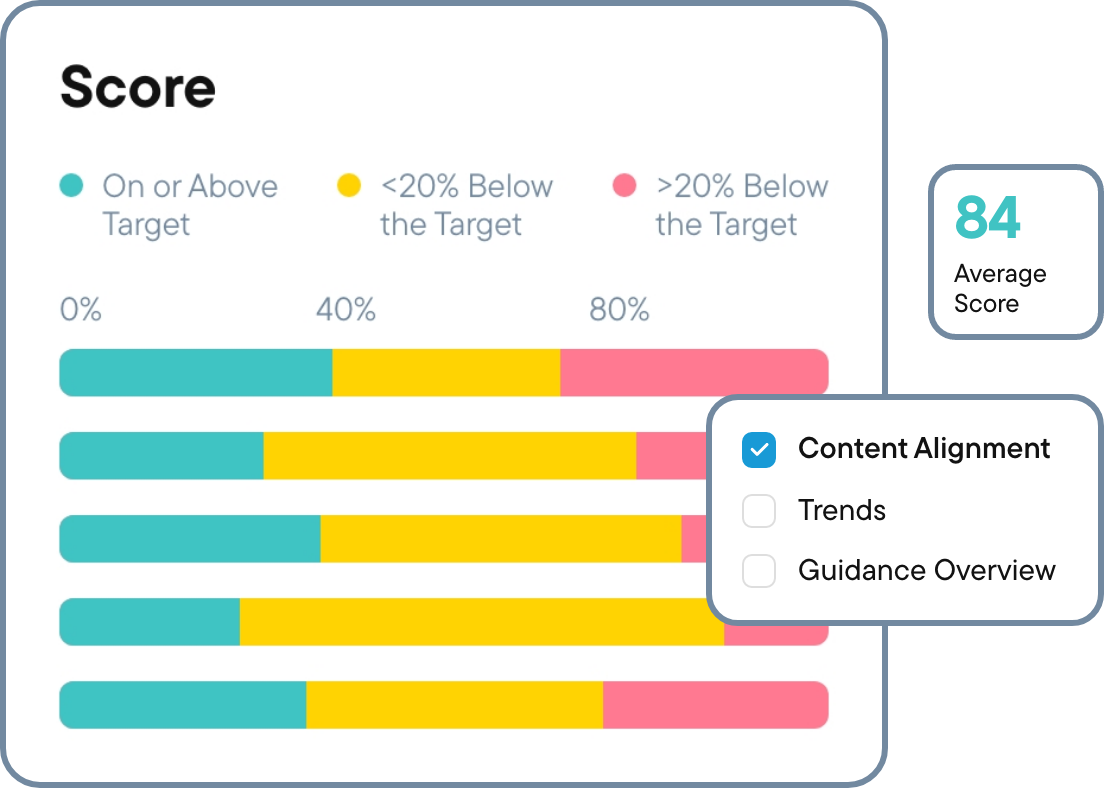

Automated quality gates

Integrate Acrolinx into your content workflows to check and score the quality of content automatically before it’s published. This makes sure only high-quality content is published while low-quality content is held for editorial review.
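As an illustration of the quality-gate idea, here is a minimal Python sketch. It assumes each piece of content already has a numeric quality score, and it doesn't call any Acrolinx API. The names `quality_gate`, `GateResult`, and `PUBLISH_THRESHOLD`, as well as the cutoff of 80, are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass

# Hypothetical cutoff for illustration; a real workflow would use
# whatever score scale and threshold your organization configures.
PUBLISH_THRESHOLD = 80


@dataclass
class GateResult:
    doc_id: str
    score: int
    action: str  # either "publish" or "hold_for_review"


def quality_gate(doc_id: str, score: int, threshold: int = PUBLISH_THRESHOLD) -> GateResult:
    """Publish content scoring at or above the threshold; hold the rest for review."""
    action = "publish" if score >= threshold else "hold_for_review"
    return GateResult(doc_id=doc_id, score=score, action=action)


if __name__ == "__main__":
    # Example scores are made up for the demonstration.
    for doc_id, score in [("release-notes", 91), ("faq-draft", 64)]:
        print(quality_gate(doc_id, score))
```

In a publishing pipeline, a step like this would typically run after the content check and before the publish step, so that held items go to an editorial queue instead of going live.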

Maintain the quality of published content

Writing standards change, whether because of new regulations, product releases, mergers, acquisitions, or other events.

Acrolinx continually checks the quality of published content and identifies content that no longer meets enterprise writing standards. This saves you the painstaking effort of having to review content manually.

AI guardrails help prevent abuse and harmful content

Our partnership with Microsoft Azure provides you with built-in LLM content filtering. For you, this means your LLM technology blocks the generation of offensive, abusive, hateful, and explicit content.

Hate

Blocking the generation of distasteful and disrespectful content

Explicit

Blocking the generation of sexually suggestive and offensive content

Harm

Blocking the generation of content about self-inflicted injury, illegal substances, or abuse

Violence

Blocking the generation of graphic depictions of assault and battery